Introduction

Deep learning, a specialized subset of machine learning, has rapidly evolved into one of the most influential technologies of the modern era. It powers a wide array of applications, from autonomous vehicles and personalized medical treatments to language translation and facial recognition. Despite its extensive use, the underlying mechanics of deep learning remain opaque to many. At its core, deep learning is driven by neural networks that attempt to emulate the way the human brain processes information. This article provides an in-depth, professional overview of deep learning, exploring how neural networks think, the mathematics behind them, and their application across various industries.

What is Deep Learning?

Deep learning refers to a class of algorithms designed to model high-level abstractions in data through deep neural networks. The term "deep" refers to the number of layers in the network: deeper networks use more layers to capture more complex patterns. Traditional machine learning models often rely on shallow architectures with few or no hidden layers, whereas deep learning stacks multiple layers of neurons to analyze more intricate data features.

A neural network is composed of interconnected units called neurons, which are arranged in layers. These layers are responsible for transforming the input data step by step, ultimately leading to an output that can be used for tasks such as classification or regression. Neural networks learn by adjusting the weights between these neurons, refining their understanding over time as they process more data.

The Architecture of a Neural Network

A neural network typically consists of three types of layers:

- Input Layer: The first layer, where raw data is introduced into the network. In an image classification task, for example, this layer receives pixel data.

- Hidden Layers: These layers contain neurons that apply various computations to the input data. The more hidden layers a network has, the deeper it is. Deep neural networks can have dozens or even hundreds of these layers, each refining the data further.

- Output Layer: The final layer generates the output of the network. In classification tasks, this might be the predicted category, while in regression tasks, it might be a continuous value.

Each neuron in a layer is connected to neurons in the previous and subsequent layers via weights. These weights determine the strength of the connection between neurons and are adjusted during training to minimize errors in the network's predictions.
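The layered structure described above can be sketched in a few lines of plain Python. This is a minimal illustration, not a production implementation: the layer sizes (4 inputs, 3 hidden neurons, 2 outputs), the helper names `dense_layer` and `init_layer`, the random initialization range, and the use of ReLU in the hidden layer are all assumptions chosen for the example.

```python
import random

def dense_layer(inputs, weights, biases, activation):
    """Compute one fully connected layer: activation(weights * inputs + bias) per neuron."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        # Weighted sum of every input feeding this neuron, plus the neuron's bias.
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs

def relu(z):
    return max(0.0, z)

def identity(z):
    return z

def init_layer(n_in, n_out, rng):
    """Small random weights and zero biases (an illustrative choice, not a recommendation)."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

rng = random.Random(0)
hidden_w, hidden_b = init_layer(4, 3, rng)  # input layer (4 values) -> hidden layer (3 neurons)
output_w, output_b = init_layer(3, 2, rng)  # hidden layer -> output layer (2 values)

x = [0.2, 0.7, 0.1, 0.9]                    # hypothetical input, e.g. four pixel intensities
hidden = dense_layer(x, hidden_w, hidden_b, relu)
output = dense_layer(hidden, output_w, output_b, identity)
print(hidden, output)
```

Each row of a weight list holds the connection strengths into one neuron, so adjusting a single number changes how strongly one neuron listens to one input.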

How Neural Networks Learn

The learning process of a neural network involves a series of steps, commonly referred to as training. The goal is for the model to adjust its weights based on the data it processes, thereby improving its accuracy over time. This process is typically carried out through the following steps:

- Forward Propagation: Data enters the input layer and is processed through the hidden layers, eventually reaching the output layer. Each neuron applies a specific function to its input, which contributes to the final prediction of the model.

- Error Calculation: After forward propagation, the network compares its prediction to the actual output. The difference between these values is calculated using a loss function, which quantifies how far off the prediction is from the true result.

- Backpropagation: Backpropagation is the process by which the error is propagated back through the network to adjust the weights of the neurons. This is done by computing the gradient of the loss function with respect to each weight and using this information to update the weights via optimization algorithms.

- Optimization: Gradient descent is the most common optimization algorithm used in neural networks. It works by adjusting the weights in the direction that minimizes the error, effectively learning from the data to improve future predictions.
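The four steps above can be sketched as a short training loop. To keep the example readable it trains a single linear neuron with a mean squared error loss, so the backward pass reduces to one application of the chain rule; a real deep network repeats that step layer by layer. The function name `train_linear_neuron`, the learning rate, the epoch count, and the toy data (generated from y = 2x + 1) are assumptions made for illustration.

```python
def train_linear_neuron(xs, ys, learning_rate=0.05, epochs=1000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    loss = 0.0
    for _ in range(epochs):
        # 1. Forward propagation: compute a prediction for every input.
        preds = [w * x + b for x in xs]

        # 2. Error calculation: mean squared error between predictions and targets.
        errors = [p - y for p, y in zip(preds, ys)]
        loss = sum(e * e for e in errors) / n

        # 3. Backward pass: gradient of the loss with respect to each parameter.
        grad_w = sum(2 * e * x for e, x in zip(errors, xs)) / n
        grad_b = sum(2 * e for e in errors) / n

        # 4. Optimization: step against the gradient to reduce the loss.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    # The returned loss is the value measured just before the final update.
    return w, b, loss

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [2.0 * x + 1.0 for x in xs]   # toy targets from y = 2x + 1
w, b, final_loss = train_linear_neuron(xs, ys)
print(w, b, final_loss)
```

On this toy data the learned `w` and `b` should approach 2 and 1. A learning rate that is too large would make the updates overshoot and diverge, which is one reason the learning rate is treated as a hyperparameter to tune.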

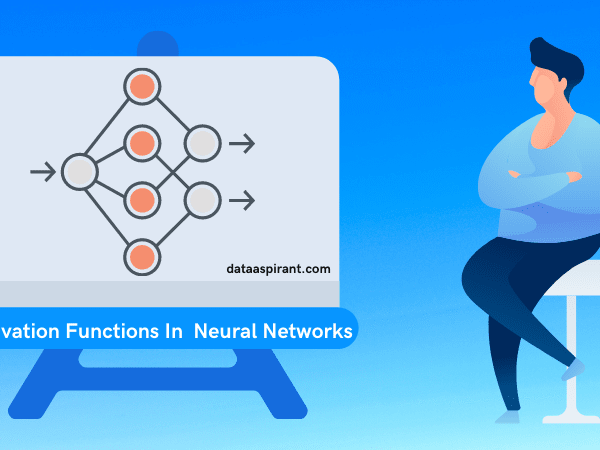

Activation Functions

The activation function is a key component of each neuron in a neural network. It introduces non-linearity into the network, enabling it to learn and model complex relationships within the data. Without activation functions, a stack of layers would collapse into a single linear transformation, no matter how many layers it had, limiting its ability to learn complex patterns.

Several types of activation functions are commonly used in deep learning:

- Sigmoid: The sigmoid function maps its input to a value between 0 and 1, making it particularly useful for binary classification tasks.

- ReLU (Rectified Linear Unit): ReLU outputs the input directly if it is positive and zero otherwise. It is widely used because it mitigates the vanishing gradient problem and is cheap to compute, which speeds up training.

- Tanh: The hyperbolic tangent function, or tanh, outputs values between -1 and 1, making it suitable for tasks where values are expected to be centered around zero.

- Softmax: Softmax is typically used in the output layer of multi-class classification problems. It transforms the outputs of the neurons into probabilities, where the sum of all probabilities equals 1.
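The four functions listed above are short enough to write out directly. The following is a minimal sketch using only Python's standard `math` module; the two-branch form of `sigmoid` and the max-subtraction in `softmax` are common numerical-stability choices added here, not something the definitions themselves require.

```python
import math

def sigmoid(z):
    """Map any real number into the open interval (0, 1)."""
    # Two branches avoid overflow in math.exp for large negative z.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def relu(z):
    """Pass positive inputs through unchanged; output zero otherwise."""
    return max(0.0, z)

def tanh(z):
    """Map any real number into the open interval (-1, 1), centered on zero."""
    return math.tanh(z)

def softmax(zs):
    """Turn a list of scores into probabilities that sum to 1."""
    # Subtracting the maximum leaves the result unchanged but keeps exp() small.
    m = max(zs)
    exps = [math.exp(z - m) for z in zs]
    total = sum(exps)
    return [e / total for e in exps]

print(sigmoid(0.0), relu(-3.0), tanh(0.0), softmax([1.0, 2.0, 3.0]))
```

Note that softmax operates on a whole layer of outputs at once, while the other three are applied to each neuron independently.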

Types of Neural Networks

Deep learning encompasses various types of neural networks, each suited to different kinds of tasks. Some of the most widely used architectures include:

- Feedforward Neural Networks (FNNs): The simplest type of neural network, where data flows in one direction from input to output. FNNs are generally used for classification and regression tasks.

- Convolutional Neural Networks (CNNs): CNNs are specialized for image and video processing. They use convolutional layers to detect patterns such as edges, shapes, and textures, making them ideal for tasks like object recognition and facial detection.

- Recurrent Neural Networks (RNNs): RNNs are designed for processing sequential data, such as time series or natural language. They have loops that allow them to retain information from previous steps in the sequence, making them well suited for tasks like language modeling and speech recognition.

- Generative Adversarial Networks (GANs): GANs consist of two neural networks, a generator and a discriminator, that compete with each other: the generator produces synthetic data and the discriminator tries to tell it apart from real data. GANs are used for tasks like image synthesis and data augmentation.

- Autoencoders: Autoencoders are used primarily for unsupervised learning tasks, such as dimensionality reduction and data compression. They consist of an encoder that reduces the input data into a compact representation and a decoder that reconstructs the original data from this representation.
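To make the CNN entry above more concrete, the sketch below slides a small filter over a toy image and shows how it responds to a vertical edge. The function name `conv2d`, the 4x6 image, and the hand-written edge kernel are illustrative assumptions; in a trained CNN the kernel values are learned rather than chosen by hand. As in most deep learning libraries, the kernel is not flipped, so strictly speaking this computes a cross-correlation.

```python
def conv2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the feature map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the kernel element-wise with the patch under it and sum.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# Toy image: dark on the left (0), bright on the right (1).
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]

# Hand-written vertical edge detector (a learned filter would play this role in a CNN).
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = conv2d(image, kernel)
print(feature_map)  # [[0.0, 3.0, 3.0, 0.0], [0.0, 3.0, 3.0, 0.0]]
```

The output is zero over the flat regions and large where the window straddles the dark-to-bright boundary, which is the sense in which a convolutional filter "detects" an edge.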

Training Deep Neural Networks

Training deep neural networks can be a complex process that requires careful tuning and optimization. Some of the common challenges encountered during training include:

- Vanishing Gradient Problem: In very deep networks, the gradients used in backpropagation can become extremely small, causing the network to stop learning effectively. Using activation functions like ReLU and techniques like batch normalization can help alleviate this issue.

- Overfitting: Overfitting occurs when a model learns the training data too well, including noise and outliers, which negatively impacts its ability to generalize to new data. Techniques like dropout, where random neurons are deactivated during training, and early stopping are used to prevent overfitting.

- Underfitting: Underfitting happens when the model is too simple to capture the patterns in the data. This often occurs when the model lacks sufficient complexity or is not trained long enough. Addressing underfitting typically involves using more complex models or providing more data.

- Hyperparameter Tuning: The performance of a neural network is highly dependent on hyperparameters, such as the learning rate, batch size, and number of hidden layers. Optimization techniques like grid search or random search are often used to find the best hyperparameters.
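The vanishing gradient problem from the list above can be illustrated with simple arithmetic. During backpropagation the gradient reaching an early layer is a product of per-layer derivatives. The sketch below is a deliberate simplification: it ignores the weight matrices and assumes every layer sees the same pre-activation value, so only the effect of the activation function is shown. The helper name `gradient_scale` is hypothetical.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    """Derivative of the sigmoid; its maximum value is 0.25, reached at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)

def relu_grad(z):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if z > 0 else 0.0

def gradient_scale(local_grad, pre_activation, depth):
    """Product of `depth` identical activation derivatives (weights ignored)."""
    return local_grad(pre_activation) ** depth

for depth in (1, 5, 10, 20):
    # Best case for sigmoid (z = 0) versus an active ReLU unit (z = 1).
    print(depth,
          gradient_scale(sigmoid_grad, 0.0, depth),
          gradient_scale(relu_grad, 1.0, depth))
```

Even in the sigmoid's best case the factor shrinks by at least 4x per layer, falling below one millionth after ten layers, while an active ReLU unit passes the gradient through unchanged. This is the intuition behind preferring ReLU in deep networks.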

Applications of Deep Learning

Deep learning has numerous applications across a wide range of industries. Some of the most prominent applications include:

- Image and Video Recognition: Deep learning, particularly CNNs, powers image recognition technologies used in everything from medical imaging and autonomous vehicles to facial recognition and augmented reality.

- Natural Language Processing (NLP): RNNs, transformers, and other deep learning models are used in NLP tasks such as machine translation, sentiment analysis, and speech recognition, enabling systems like virtual assistants and chatbots.

- Healthcare: Deep learning is revolutionizing healthcare by enabling automated diagnostic systems, drug discovery, and personalized treatment. For example, neural networks are used to analyze medical images for signs of diseases like cancer.

- Autonomous Systems: Deep learning plays a critical role in the development of self-driving cars, drones, and robots, enabling them to make real-time decisions based on sensor data.

- Gaming: Deep learning has been used to build AI systems that master games such as Go and poker. Systems like AlphaGo have demonstrated superhuman abilities, providing insight into both game strategies and machine intelligence.

Challenges in Deep Learning

While deep learning holds immense potential, it also faces several challenges that researchers and practitioners must overcome:

- Data Availability: Deep learning models often require large volumes of labeled data for training. Collecting and annotating data can be both time-consuming and expensive.

- Computational Resources: Training deep learning models requires substantial computational power, often necessitating the use of specialized hardware such as Graphics Processing Units (GPUs).

- Model Interpretability: Neural networks, particularly deep networks, are often criticized for their black-box nature. The lack of transparency in decision-making processes is a concern, especially in high-stakes applications like healthcare and finance.

- Ethical and Bias Concerns: Deep learning models can perpetuate biases present in the training data, leading to discriminatory or unfair outcomes. Addressing these ethical concerns is a critical area of focus for the AI community.

The Future of Deep Learning

The future of deep learning is filled with exciting possibilities. Key developments to watch include:

- Transfer Learning: Transfer learning allows models to leverage knowledge gained from one task and apply it to a different, but related, task, reducing the need for large amounts of task-specific data.

- Federated Learning: Federated learning enables decentralized model training, allowing data to remain on individual devices while the model is trained across multiple devices. This improves privacy and data security.

- Quantum Computing: Quantum computing holds the potential to accelerate deep learning algorithms, providing new ways to train models faster and handle more complex data sets.
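The federated learning entry above describes training across devices without moving the data. The article does not name an aggregation rule, so the sketch below assumes the commonly used federated averaging scheme, in which a server combines locally trained weights in proportion to each device's data size. The function name `federated_average` and the example numbers are hypothetical.

```python
def federated_average(client_weights, client_sizes):
    """Average per-client weight vectors, weighted by each client's number of examples."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    averaged = [0.0] * n_params
    for weights, size in zip(client_weights, client_sizes):
        for k, w in enumerate(weights):
            # A client holding more data contributes proportionally more.
            averaged[k] += w * size / total
    return averaged

# Two hypothetical devices that each trained the same two-parameter model locally.
client_weights = [[1.0, 2.0], [3.0, 4.0]]
client_sizes = [100, 300]   # number of local training examples per device

global_weights = federated_average(client_weights, client_sizes)
print(global_weights)  # [2.5, 3.5]
```

Only the weight vectors travel to the server; the raw training examples stay on each device, which is the source of the privacy benefit.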

Frequently Asked Questions

What is deep learning?

Deep learning is a subset of machine learning that uses neural networks with many layers to model complex patterns in large datasets.

What are neural networks?

Neural networks are computational models inspired by the human brain, consisting of interconnected layers of neurons that process and learn from data.

What is the difference between deep learning and traditional machine learning?

Deep learning uses multiple layers of neurons for complex pattern recognition, whereas traditional machine learning models often use simpler, less complex structures.

What is forward propagation in deep learning?

Forward propagation is the process of passing input data through the layers of a neural network to generate an output.

What is backpropagation?

Backpropagation is a method used to update the weights of a neural network by calculating the gradient of the error and sending it back through the network to adjust the weights.

What are activation functions?

Activation functions determine how strongly a neuron responds to its input. They introduce non-linearity into the network, enabling it to learn complex patterns.

What is overfitting in deep learning?

Overfitting occurs when a model learns the training data too well, including noise and outliers, which hampers its ability to generalize to new data.

What are convolutional neural networks (CNNs)?

CNNs are a type of deep learning model specifically designed for processing grid-like data, such as images, by applying convolutional filters to detect patterns.

What is the vanishing gradient problem?

The vanishing gradient problem occurs when gradients become very small as they propagate through deep networks, leading to poor training performance.

What are the applications of deep learning?

Deep learning is used in various fields, including image recognition, natural language processing, self-driving cars, and healthcare diagnostics.

Conclusion

Deep learning has fundamentally changed the landscape of artificial intelligence, unlocking new possibilities across a variety of domains. While challenges remain, the field continues to evolve, and its impact is poised to grow even further. By understanding how neural networks function and learn, we can better appreciate the power and potential of deep learning to revolutionize industries, improve lives, and shape the future of technology.